Teaching and Learning

Revolutionizing Education: Exploring Generative AI in Schools and Teacher Education

Dr. Alec Couros is a distinguished professor of Educational Technology and Media with the Faculty of Education and the Director of the Centre for Teaching and Learning at the University of Regina. He is an internationally recognized leader, innovator, and speaker who has given hundreds of keynotes and workshops around the globe on diverse topics such as open education, networked learning, digital citizenship, and critical media literacy. Dr. Couros has received numerous awards for innovation in teaching and learning. Dr. Couros has been actively developing concrete examples for integrating artificial intelligence (AI) into education and has delivered several talks on AI in teaching and learning.

In this interview, Alec will share his perspectives on navigating the complexities of integrating GenAI in schools and teacher education, the ethical considerations, and the strategies for maximizing its benefits while addressing its limitations.

Question: Universities across Canada have varied approaches to the use of generative AI tools in teaching and learning, as it presents both opportunities and risks. In the setting of our teacher education programs, and keeping in mind the essential skills future educators need, what do you think would be an appropriate approach in this specific domain?

Dr. Couros: The University of Regina has formed a Working Group on Generative AI in Teaching and Learning. This group has been tasked with establishing guidelines for the appropriate and responsible use of AI as it relates to University of Regina (U of R) coursework. The initial work of the committee, Generative AI at the U of R – Guidelines for faculty and instructor, has been made available on the Centre for Teaching and Learning website. There are also sample syllabus statements that instructors can adapt to help students better understand how they might use AI in a way that is condoned by the instructor.

For more information, visit the Centre for Teaching & Learning website.

U of R students and staff have access to Microsoft Copilot, an AI-powered tool. This is an institutional tool that students and staff log in to with their U of R credentials. One of its advantages is that the queries and prompts that students enter are not fed back into model training, so they're not reused. It is more confidential in that respect.

AI is pervasive in tools like Office 365, Google Docs, Grammarly, Facebook, Snapchat, and many more. Its integration into workflows is seamless, often leading users to overlook its presence.

If we look at the Grade K to 12 school setting, we see the potential of AI in freeing teachers from numerous daily administrative tasks. However, this does not mean that we can hire fewer teachers.

Instead, the focus should be on how we can enrich and revitalize our education, and how AI can help us gain time and allow us to spend more time with our students, sitting down to learn together. AI can augment those relationships, rather than replace them.

In our Teacher Education Program here at the University, there should be a greater emphasis on skills and literacies development. Space should be made available for future teachers to learn how to collaborate with AI to enhance our expertise, enhance our relational skills, and automate some of the mundane tasks that do not demand the same level of human involvement. We must make sure that students who come through our program are digitally literate, information literate, and certainly AI literate. By revitalizing Educational Technology programs, we can ensure that future educators are equipped with the necessary skills and knowledge to effectively integrate AI into teaching and learning practice.

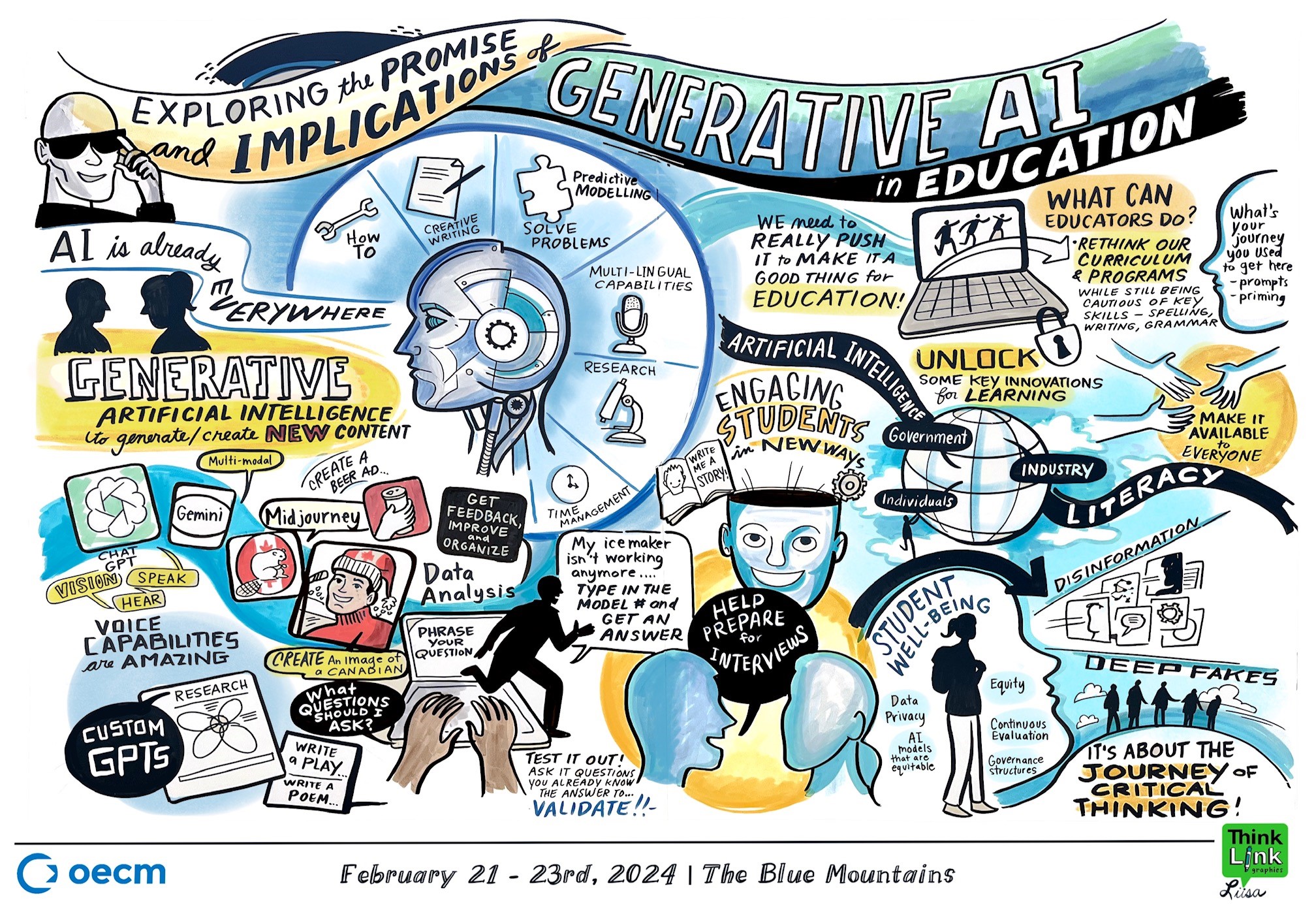

Caption: An infographic Dr. Couros shared on social media. The infographic was based on a presentation Dr. Couros delivered at the 3rd Leadership & Collaboration Invitational Symposium hosted by OECM on February 21-23, 2024.

Photo courtesy of Dr. Alec Couros

Question: Many institutions struggle to implement AI at scale and adopt consistent policies, and finding the right approach is not a small undertaking. If Generative AI is going to change how work gets done, how should institutions position themselves to harness the transformative potential of generative AI while navigating its complexities responsibly and ethically?

Dr. Couros: First, there needs to be an acceptance of the coming reality. People have to understand how pressing these changes are in all industries.

Second, once we’re better able to accept and anticipate these changes, we need to develop a shared vision for what these changes will entail.

From here, we need reform at all stages. How we teach. What we teach. The structure of courses and programs. We need to rethink what students need to learn and how faculty will interact with learners.

"Responsible AI use entails navigating boundaries related to privacy, bias, transparency, safety, accountability, and human oversight." - Dr. Alec Couros

If you are writing a letter to parents to communicate administrative matters, I think it is fine to use AI for a first draft as long as you are not putting students’ names and other personal information into ChatGPT. Ultimately, when you send it, you bear all responsibility for that letter. If you generate it and send it without looking at it, that’s unethical and you are going to run into problems. A good example here is that Air Canada lost a court case against a grieving passenger when it tried and failed to disavow its AI-powered chatbot.

When it comes to academic work, it is important that you are transparent. Students have to obtain explicit permission from their instructors to use an AI-powered chatbot. They have to be transparent about how they used it, what prompts they used, how much they improved the output, and which parts of the work they used it for.

There are also occasions when we shouldn’t use it. For instance, if you are writing a really personal treaty land acknowledgment, that’s not something that an AI should do first, because it should come from your soul or your heart. That’s something that needs to be much more of a human process than an AI process.

Question: In the panel discussion “Generative AI + Education: Reinventing the Teaching Experience”, Melissa Webster, Lecturer at the Massachusetts Institute of Technology (MIT) Sloan School of Management, discussed her experiments in adopting GenAI in her CIM courses (a writing-intensive management communication course and a communicating with data course). When she asked herself what workplace she was preparing her students for, she concluded that to be competitive in the workplace, they are going to need to know how to use these GenAI tools. She threw out the existing assignment and reinvented a set of assignments, such as creating a cover letter using ChatGPT, then critiquing the result and reflecting on how they think generative AI is going to play out in this situation. She found that the students who got the best cover letter out of ChatGPT were those who were the best writers in the class. She also tasked her students with using ChatGPT or other generative AI on more assignments, where students get to decide how to use it. The rubric remains unchanged. Students later have to reflect on how they used it, what worked well, what did not work well, and what they learned. Being able to tell what is wrong and to fix it is the goal. She was happy about this shift away from the mechanics of writing, which the tool could handle. She also admitted that revamping does not happen in one round; it is going to take multiple iterations.

What is your opinion on this example of an educator redesigning how they teach in an intensive writing course?

Dr. Couros: I think that anything that challenges what and how we teach to this extent is very welcome, and it’s certainly one of the things I like about Generative AI.

"It challenges us. It will force us to change, and for the better in many cases. Teaching and learning will benefit and it’s long overdue." - Dr. Alec Couros

In terms of the assignment itself, I think these types of assignments will work well at this level at least for the interim. This is a somewhat transitional pedagogy. For students to critique what AI creates is easier now than it may be in the future. To do this well, you need to be able to write. In the future, if we do not have students writing essays, they are not going to be able to critique to the same extent. If they aren’t able to write, such exercises won’t be as possible.

Writing is something that you develop like a muscle. By practicing, you become a better writer. While critique of articles does help, it doesn’t always hit the same area.

When it comes to lesson plan development, if you do not understand a basic lesson, you won’t be able to critique whether a lesson plan generated by ChatGPT is good or not. Some of this knowledge comes from writing lesson plans and some of it comes from the experience of delivering those lessons in the classroom.

In the Disney movie WALL-E, people are depicted as overly dependent on assistive technology to the point that they’ve lost touch with the natural world and are unable to move without the assistance of hoverchairs. It is an example of how people's reliance on technology can lead to a loss of essential human skills and independence.

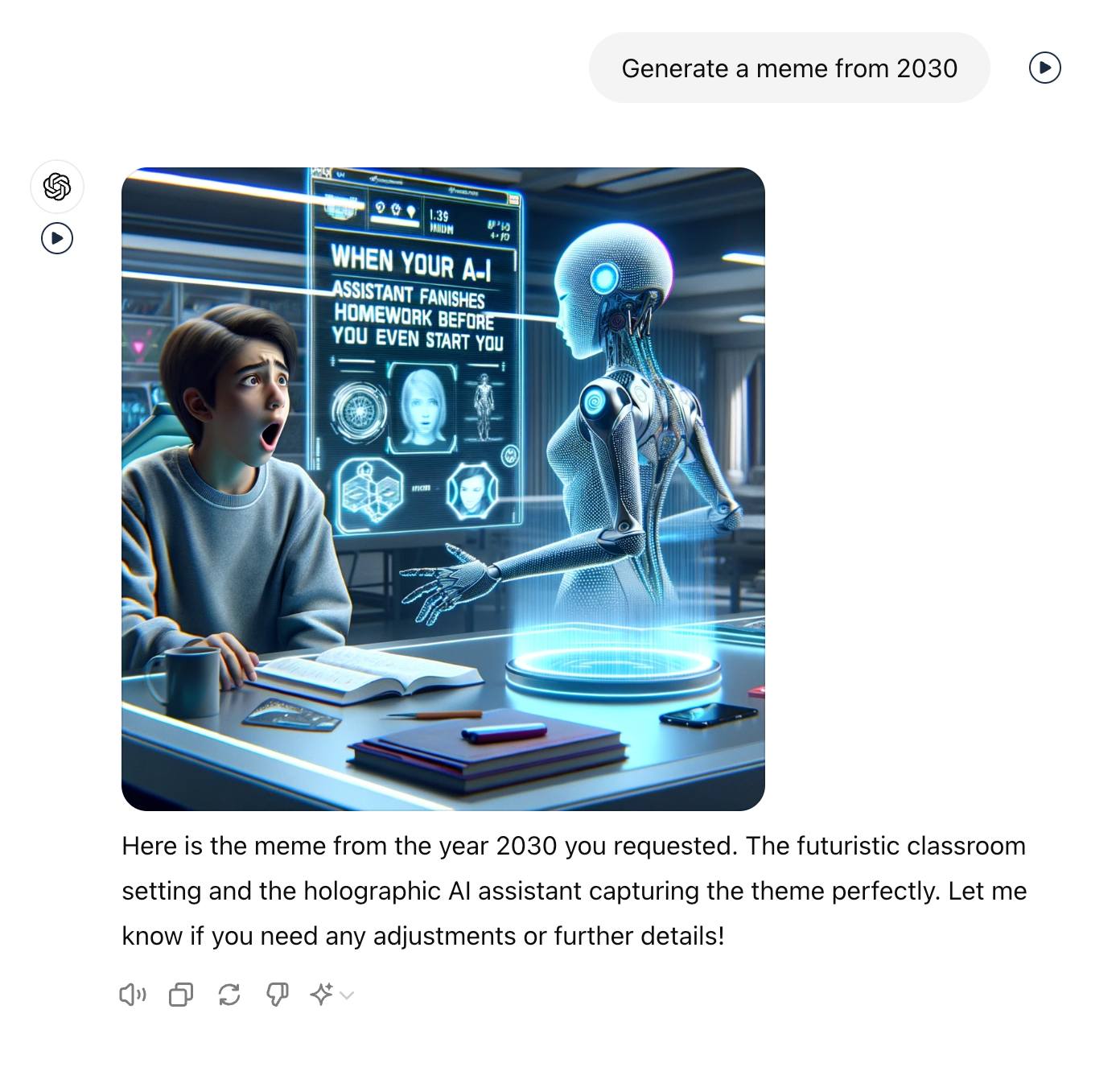

Caption: A ChatGPT prompt Dr. Couros shared on social media

Photo courtesy of Dr. Alec Couros

Question: How do instructors decide whether GenAI is something they would use in their specific field/discipline? Does it work equally well in math, physics, language, and arts? Is there a thinking process or methodology to follow to help them find the answer? As the Director of the Centre for Teaching and Learning at the University of Regina, what are some of the approaches you would like to offer to them?

Dr. Couros: This is a difficult question to answer as even the determinants for these changes are also subject-specific. Some of this will depend upon our need for a working knowledge of the subject matter and how much math we need to do to be able to critique what the AI bot produces. Some of this, especially in the social sciences, may depend upon what we feel is needed to simply produce ethical, caring, kind citizens. In writing and languages, there are many developmental questions that will determine what is necessary to know.

Every discipline will have much to discuss as these changes roll out.

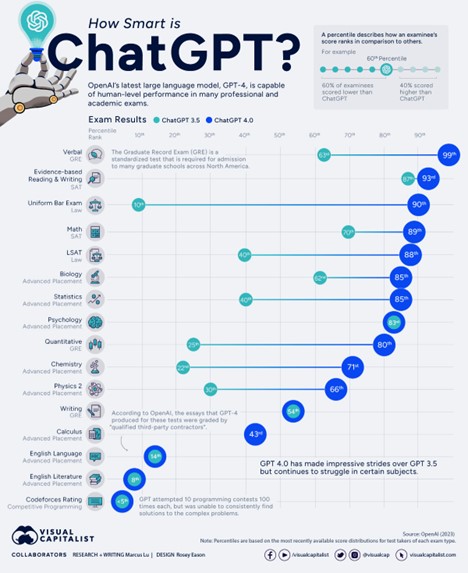

In a technical report released on March 27, 2023, OpenAI provided a comparison showing how GPT-4 surpasses GPT-3.5 in a range of academic exams. In AP Chemistry, it went from the 22nd percentile to the 71st percentile. However, it is still not very good at AP Calculus and English Literature.

Caption: ChatGPT's performance in human exams

Source: OpenAI (2023)

Over time, artificial intelligence is going to catch up on logical reasoning, problem-solving, and even social and emotional understanding.

Question: If the conclusion to the above question is positive, what is the course of action for instructors to rethink the goals for learning, revise assignments/rubrics, and redesign their Teaching and Learning practices?

Dr. Couros: There will be a need to become de-siloed within our program, subject areas, and fields in general. We can certainly make many individual changes in our courses, but we must understand how these changes might ripple through our programs. This is one of the reasons curriculum mapping activities are important when creating change in our programs.

For instance, students may have come into the Math Teacher Education program without having taken any advanced math courses before. The instructor will have to determine what they need to know to be able to teach Math, both the pedagogical aspect and the subject area aspect. Another question is whether, if AI can do certain tasks well, we still have the same need to do them ourselves. To critique the work done by AI, how much do we need to know?

There are curriculum writers who help people better understand what they need to know first to be able to know the next. Without prerequisite knowledge, it might be difficult for a learner to move on to the next level.

Learn more about the Faculty of Education's Teacher Education Programs.

Question: Instead of getting into the game of chasing cheaters, what is the advice you would like to give to instructors to prevent the misuse of ChatGPT and other generative AI in academic work? If you’d like to comment on the following:

- being very transparent and clear as to whether the use of AI is permitted in any given assessment.

- laying out the boundaries for using generative AI in coursework.

- helping students to understand the mechanism and limitations of GenAI.

- fostering student conversations regarding these emerging tools.

- providing sample statements for students to communicate how they have used AI in their work.

Dr. Couros: All of the above are very good objectives. Perhaps the one part that is missing is the “why”: convincing students of why it matters to actually do the work itself, why it matters to learn the subject area at hand, and what the disadvantages of taking shortcuts are.

While we are doing this, we must also reconsider why students “cheat” in the first place and how much of this compulsion has to do with the structures that we’ve created and continue to uphold (e.g., competitiveness, one-size-fits-all education, social and economic divides, ranking and sorting kids).

Also, AI detectors do not work well. They're not reliable and will often disproportionately affect non-native English speakers. There are hundreds of studies around that.

When it comes to misconduct investigations, professors are advised to apply further scrutiny and human judgment in conjunction with any detector results.

The most famous example of false positives is that both the U.S. Constitution and the Old Testament were “detected” as 100% AI-generated.

Therefore, using these unreliable tools to fail students is unethical.

Question: How do educators adapt themselves? How could they use/collaborate with GenAI? What are the supports made available to them?

Dr. Couros: There are supports everywhere. Social media groups. Newsletters. Peer learning/observation.

At the Centre for Teaching and Learning, we will continue to provide many AI-related offerings, and we have initiated a GenAI community of practice (CoP).

AI tools that I enjoyed exploring include HeyGen for video translations, Midjourney for images, Perplexity for research assistance, ChatGPT Pro, Bing, Google Bard, Synthesia to create avatars, WellSaid Labs for voice generation, and Tome for small presentations.

Caption: An AI-generated avatar Dr. Couros shared on social media

Photo courtesy of Dr. Alec Couros

Question: What are the limitations of Generative AI that people should be aware of when they take steps to maximize its benefits for teaching and learning, e.g., data bias, algorithmic bias, ethical considerations, trustworthiness, and reliability?

Dr. Couros: Non-trustworthy information (hallucinations).

AI-generated content will never really be consistently detectable, and AI detectors disproportionately flag the writing of non-native English speakers as AI-generated.

Another issue is that because these large language models learn from training data, every time that you upload AI-generated content to the web, the AI learns from that content. It will learn from content that is not necessarily accurate. There will be a decay in the quality of content over time. It is called Model Autophagy Disorder (MAD).

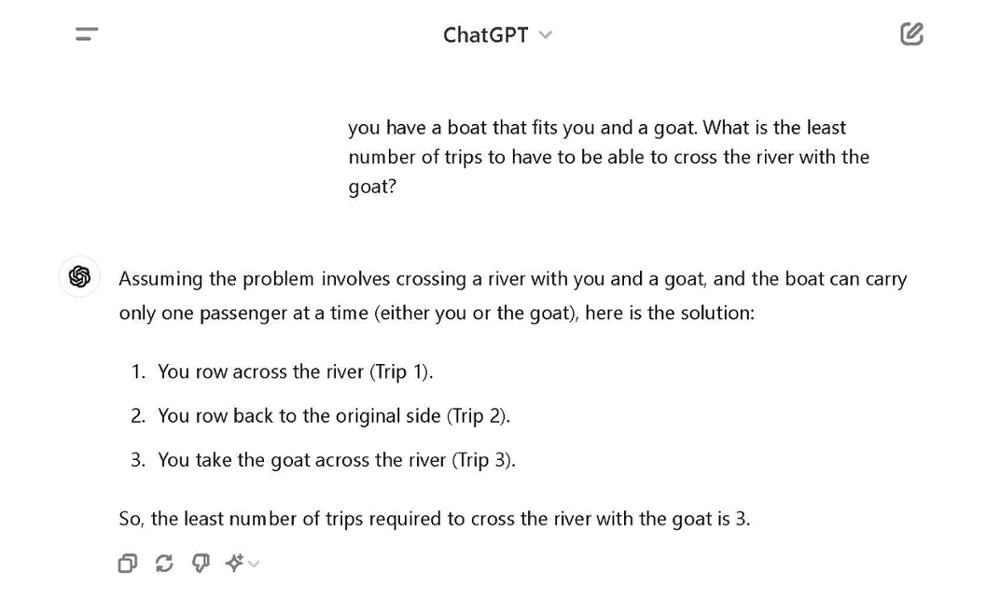

Every once in a while, I try to figure out a way that I can trick it. Suppose you ask ChatGPT this version of the river crossing problem: You have a boat that fits you and a goat. What is the least number of trips needed to cross the river with the goat? It will say you need two trips. Even a five-year-old child would know you only need one trip.

I excluded the fox, the chicken, and the sack of corn from this question, as I wanted it to think about that other puzzle. However, because it knows about that other puzzle, it does not know how to answer this question anymore.

Caption: ChatGPT 3.5 answering the river crossing problem

Source: ChatGPT 3.5

Also, unlike humans, AI does not share the same ethics and values. There’s a lack of common sense and moral intuition in AI.

In conclusion, educators can harness the full potential of Generative AI to enrich teaching and empower students for the challenges of tomorrow, while preserving the invaluable human element of teaching. Transparency, clear boundaries, and ongoing dialogue with students are paramount.